Against Compression

Let me not boil it down for you.

My Brief Career in Abstraction

I was a generative pre-trained transformer before GPTs were cool. After dropping out of grad school in the mid-eighties, I landed a job with a new digital-media division of H. W. Wilson, the venerable publisher whose Readers’ Guide to Periodical Literature had long been a mainstay of library reference desks. Looking to capitalize on the surging popularity of personal computers and online databases, the company had decided to create a digital supplement to the Guide that would include 200-word summaries of articles from a couple hundred of the most popular magazines in the country.

Along with a dozen or so other failed academics and wannabe writers, I was hired to produce these abstracts. I was assigned a small, low-walled cubicle in a large room in an office building in Cambridge, Massachusetts. On my little metal desk sat a terminal connected to a minicomputer running the then cutting-edge Wang Word Processing System. When I arrived each morning, I would be handed a copy of a recent issue of a magazine. It might be Rolling Stone, it might be Scientific American, it might be Redbook. I would skim each article and type up a summary on the terminal, dutifully inserting the various formatting codes the system required to output the text properly. Then I’d get another issue of another magazine and go through the drill again. The job was fun for a few days — I was getting paid to read magazines — then it wasn’t. As management started increasing the “target goal” for the number of abstracts that needed to be produced each hour, I found myself a pieceworker in a literary sweatshop. I quit after a few months.

I rarely gave a thought to that job after leaving it, but I’ve been thinking about it a lot recently. It’s hard to imagine work better suited to the talents of an AI chatbot. Feed in the text of an article, and out pops a serviceable summary at a specified length. What I and my colleagues labored to produce in a month, a bot could output in seconds. I’d like to think the abstracts we wrote were at least slightly better than what a machine would generate — we actually understood what we were reading, right? — but I’m probably kidding myself. If I went back and read our summaries now, I suspect I would discover a high percentage of slop. And surely the bot’s work would have been more consistent in style and level of detail than what the bunch of us churned out in our isolated cubicles. I can’t help but feel retrospectively disposable.

Distillations

In 1933, just as Prohibition was being repealed, the German-American inventor Hans Peter Luhn filed a patent for what he called “The Cocktail Oracle.” It was a set of plastic cards that allowed people to quickly identify the drinks they could make with whatever ingredients they had on hand. There was a keycard with the names of thirty-six of the most popular cocktails of the day, arranged in a four-by-nine grid. The keycard was entirely black except for the names of the drinks, which were translucent. Then there were fifteen ingredient cards, each representing a common spirit, mixer, or garnish. The ingredient cards had a four-by-nine grid of rectangles, matching the keycard’s grid of names. If an ingredient went into a particular cocktail, the rectangle would be clear. Otherwise, it was black. You’d select the cards for the ingredients you wanted to use, arrange them behind the keycard, and hold the set of cards up to a light. The names of the drinks you could make would be illuminated.

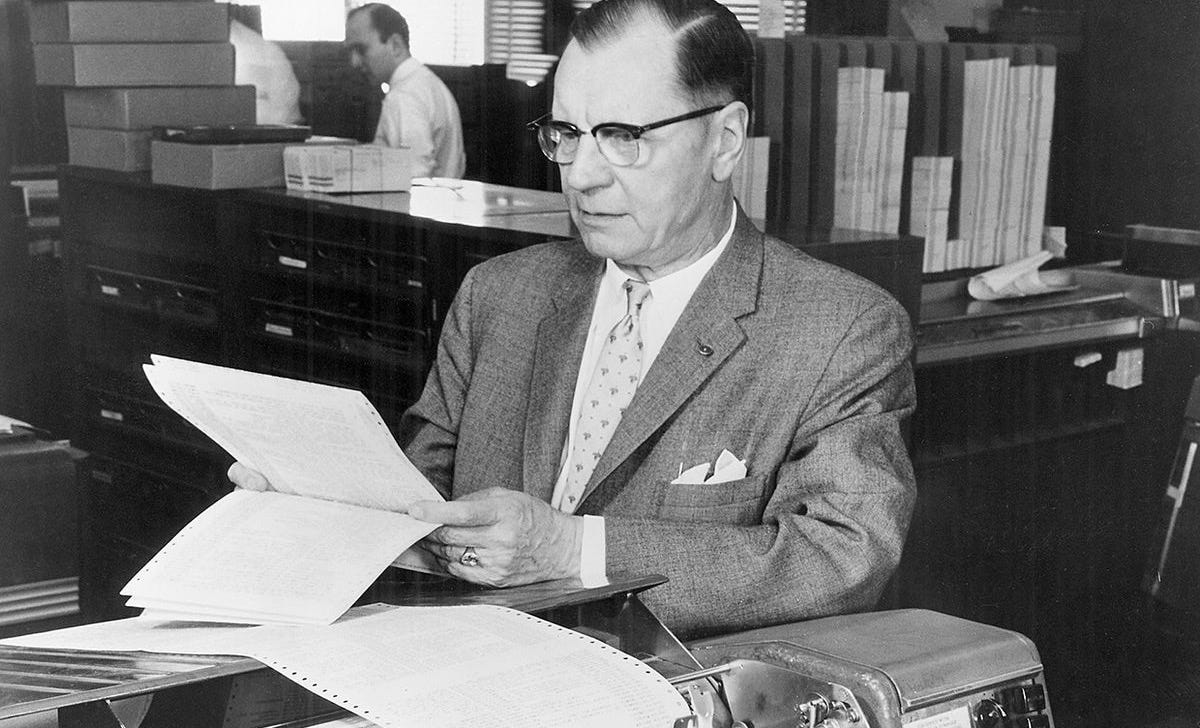

Luhn, a dapper man whose career up to then had been in the textile trade, wasn’t interested in inebriation. He was interested in information. Drink recipes, he intuited, are algorithms; the names of ingredients are data the algorithms draw on. The Cocktail Oracle was a simple, if ingenious, tool for storing, sorting, and selecting information. It was an analog computer, an app made of plastic. What mattered wasn’t the drinks but how they were represented in data.
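Because light reaches a drink’s name only if every card stacked behind the keycard is clear at that spot, the Oracle behaves like a bitwise AND over the selected ingredient cards. The Python sketch below is a hypothetical model of that mechanism, not a reconstruction of Luhn’s patent: the four drinks, their recipes, and the names `ingredient_card` and `hold_to_light` are invented for illustration, and each card’s four-by-nine grid is flattened into one bit per drink position.

```python
from functools import reduce

# Hypothetical recipes; the real Oracle's thirty-six drinks are not reproduced here.
RECIPES = {
    "Martini": {"gin", "dry vermouth", "olive"},
    "Manhattan": {"whiskey", "sweet vermouth", "bitters"},
    "Gin Rickey": {"gin", "lime", "soda"},
    "Old Fashioned": {"whiskey", "bitters", "sugar"},
}
# Each drink gets a fixed position on the keycard (bit 0, bit 1, ...).
DRINKS = list(RECIPES)


def ingredient_card(ingredient):
    """Build one ingredient card: a set bit means that cell is clear."""
    mask = 0
    for pos, drink in enumerate(DRINKS):
        if ingredient in RECIPES[drink]:
            mask |= 1 << pos
    return mask


def hold_to_light(ingredients):
    """Stack the chosen cards behind the keycard and return the lit names."""
    keycard = (1 << len(DRINKS)) - 1  # every name on the keycard is translucent
    # Light passes a cell only if it is clear on every card: a bitwise AND.
    light = reduce(lambda acc, ing: acc & ingredient_card(ing), ingredients, keycard)
    return [drink for pos, drink in enumerate(DRINKS) if (light >> pos) & 1]
```

For example, stacking the whiskey and bitters cards lights up `["Manhattan", "Old Fashioned"]`, while adding the gin card to the stack leaves every name dark.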

IBM was good at spotting talent, and it spotted Luhn. His cards, the company recognized, resembled the punch cards that were then used to store data and enter it into mainframes. IBM hired the inventor and gave him free rein. He quickly became one of its star thinkers — and, despite having no formal training in computer science or even mathematics, one of the world’s most important innovators in digital computing. He pioneered many techniques for the machine processing of text, from error detection to searching to indexing, that remain in common use today.

His most ambitious project, undertaken in the late 1950s, toward the end of his IBM career and his life, was the creation of a computer program for generating summaries of documents. In “The Automatic Creation of Literature Abstracts,” a celebrated and widely cited article published in the IBM Journal of Research and Development in April 1958, he laid out the problem he was seeking to solve with his “auto-abstracts”:

The preparation of abstracts is an intellectual effort, requiring general familiarity with the subject. To bring out the salient points of an author’s argument calls for skill and experience. Consequently a considerable amount of qualified manpower that could be used to advantage in other ways must be diverted to the task of facilitating access to information. This widespread problem is being aggravated by the ever-increasing output of technical literature.

Using machines to create abstracts would, Luhn argued, greatly increase the efficiency of this routine but ever more essential work. But it would do more than that. By removing people and their quirks and biases from the process, it would improve the quality of the summaries:

The abstracter’s product is almost always influenced by his background, attitude, and disposition. The abstracter’s own opinions or immediate interests may sometimes bias his interpretation of the author’s ideas. The quality of an abstract of a given article may therefore vary widely among abstracters, and if the same person were to abstract an article again at some other time, he might come up with a different product. [With] the application of machine methods . . . both human effort and bias may be eliminated from the abstracting process.

Luhn’s method of auto-abstracting was founded on a simple observation: “a writer normally repeats certain words as he advances or varies his arguments and as he elaborates on an aspect of a subject.” In any piece of expository writing, the words that appear most frequently (excluding commonplace ones like “the” and “to”) also tend to be the most “significant” — the ones that reveal the most about the author’s theme or argument. And the sentences that contain the largest “clusters” of those significant words represent in turn the most significant sentences. Through a statistical analysis of word frequency and clustering, Luhn’s algorithm composed abstracts by identifying and then stringing together an article’s most significant sentences.
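The frequency-and-clustering procedure can be sketched in a few lines of Python. This is a simplified, hypothetical reconstruction, not Luhn’s program. It assumes a short stop list and treats any non-stop word that occurs at least twice as “significant” (Luhn also set an upper frequency cutoff, omitted here). Each sentence is scored by its best cluster, counted as the square of the number of significant words in the cluster divided by the cluster’s length in words, with a cluster allowed to bridge at most four non-significant words; that is the significance factor as Luhn’s paper is commonly summarized. The names `sentence_score` and `auto_abstract` are invented for illustration.

```python
import re
from collections import Counter

# Hypothetical, abbreviated stop list; Luhn's list of common words was longer.
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "it",
              "that", "for", "on", "as", "with", "by", "was", "are", "be", "this"}


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def significant_words(sentences, min_count=2):
    """Non-stop words that recur at least min_count times across the text."""
    counts = Counter(w for s in sentences for w in tokenize(s) if w not in STOP_WORDS)
    return {w for w, c in counts.items() if c >= min_count}


def sentence_score(sentence, significant, max_gap=4):
    """Best cluster score: (significant words in cluster)**2 / cluster length."""
    words = tokenize(sentence)
    positions = [i for i, w in enumerate(words) if w in significant]
    if not positions:
        return 0.0
    best = 0.0
    start = prev = positions[0]
    count = 1
    for pos in positions[1:]:
        if pos - prev - 1 <= max_gap:  # few enough filler words: same cluster
            count += 1
        else:  # gap too wide: close the current cluster and start a new one
            best = max(best, count * count / (prev - start + 1))
            start, count = pos, 1
        prev = pos
    return max(best, count * count / (prev - start + 1))


def auto_abstract(text, n_sentences=2):
    """Pick the highest-scoring sentences and return them in original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    significant = significant_words(sentences)
    top = sorted(range(len(sentences)),
                 key=lambda i: sentence_score(sentences[i], significant),
                 reverse=True)[:n_sentences]
    return [sentences[i] for i in sorted(top)]
```

On a toy text that mixes two sentences about hormones and the nervous system with one about the weather and one about lunch, the sketch keeps the two sentences dense with repeated words. Its output is a string of extracted sentences, not a rewritten summary.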

When Luhn gave a public demonstration of his computer-generated summaries at a library sciences conference in 1958, the event was covered with great excitement by the press. It seemed to mark a breakthrough in natural language processing that heralded a new era in artificial intelligence. Here was a machine that could read and write! When Luhn died of leukemia in 1964, the New York Times ran an obituary that made prominent mention of the auto-abstracting demo. Six years after the fact, the event still seemed rich with promise:

Mr. Luhn, in a demonstration, took a 2,326-word article on hormones in the nervous system from The Scientific American, inserted it in the form of magnetic tape into an I.B.M. computer, and pushed a button. Three minutes later, the machine’s automatic typewriter typed four sentences giving the gist of the article, of which the machine had made an abstract. Mr. Luhn thus showed, in practice, how a machine could do in three minutes what would have taken at least half an hour’s hard work.

Luhn’s method was compelling in theory — and it was good enough for a successful demo — but it had a fatal flaw. Making sense of the summaries required too much work. Readers of the abstracts would, as Luhn admitted in his paper, need to “learn how to interpret them and how to detect their implications,” recognizing “that certain words contained in the sample sentences stand for notions which must have been elaborated upon somewhere in the article.” Luhn’s abstracts gave subject-matter experts a general sense of what the underlying articles were about, but to everyone else they were gobbledygook.

I am living proof of Luhn’s failure. If his auto-abstracter had worked even moderately well, I would never have been hired by H. W. Wilson. Rather than recruiting a squad of overeducated scribblers, the company would have loaded its minicomputer with an IBM Auto-Abstracting System and paid a few dunderheads to feed magazine pages into an optical character recognition scanner.

For many years after Luhn’s death, other programmers tried to refine his method, mainly by testing other ways of identifying salient words and sentences in a text. The results remained underwhelming. After decades of disappointment, the pursuit of a system for computer-generated summaries came to be seen as a dead end. “If our aim is to produce abstracts that cannot be told from manual abstracts, then it is hard to believe that systems relying on selection and limited adjustment of textual material could ever succeed,” lamented a computer scientist in a 1990 paper.

Only now, nearly seventy years after his demo, has Luhn’s dream been fulfilled. As with other perplexing challenges in natural language processing — translating text from one language into another, interpreting simple spoken commands, winning Jeopardy — the problem of automated abstracting came to be solved not with elegant theories of cognition and language but with the brute-force application of quantities of data and computing power that would have been unimaginable to computer scientists in the 1950s and ’60s. Today’s generative pre-trained transformers resemble Luhn’s auto-abstracter in employing a statistical analysis of text, but the scale at which they operate is entirely different. Luhn worked with a garden trowel. GPTs work with a fleet of backhoes.

Artificial Generalizing Intelligence

In realizing Luhn’s dream, GPTs also distort it. An abstract, for H. P. Luhn and H. W. Wilson as for the researchers they sought to aid, was a navigational tool used to guide intellectual journeys — an efficient means of identifying and prioritizing, among a welter of possibilities, those articles one actually needed to read to advance one’s knowledge in a subject. The summaries pumped out by GPTs can play a similar role. Experts can use them to survey the latest writings in their field and plot a path through them. But in their common use — by students, by paper pushers, by the general public — they aren’t navigational aids. They’re substitutes. The machine-generated summary takes the place of the human-written work. The gist becomes the end product.

“Intelligence is compression,” says Ilya Sutskever, the machine-learning pioneer who was chief scientist at OpenAI until quitting last year. The statement, which has become a commonplace in AI circles, illustrates itself. Sutskever has surveyed many examples of what he considers “intelligence,” and he has compressed them all into a generalization, a pattern, an abstraction: “intelligence is compression.” In addition to being a description of human intelligence, the statement, not coincidentally, describes the basic procedure of machine learning and, in particular, the work of generative pre-trained transformers. A GPT takes in many, many examples of human thoughts, ideas, and observations, as expressed through words, pictures, or sounds, and boils them down into a concise, statistical representation, or model, of knowledge. That model is then used to interpret prompts and generate new, derivative thoughts, ideas, and observations that have the appearance of human expression.

But “intelligence is compression,” when applied to the mind rather than the computer, is a grotesque oversimplification. It presents one (admittedly very important) aspect of intelligence as the whole of intelligence, dismissing all other ways of thinking and perceiving as inconsequential. In defining an indeterminate, multifaceted human quality (intelligence) in terms of a computer operation (machine learning), Sutskever and his ilk fall victim to what might be called the neuromachinic fallacy — the desire to see in the workings of a machine a reflection of the workings of the mind. They are not alone. Throughout history, scientists, philosophers, and engineers have been quick to use a new technology — be it a hydraulic fountain, a mechanical clock, or a telephone switchboard — as a metaphor for the brain. All the metaphors have some descriptive value, but all of them are also reductive, incomplete, and, when taken as fact, misleading.

The body is represented in the brain by an array of neurons, and as the body familiarizes itself with particular locations, those locations, too, become represented in the brain by neuronal arrays. These mental representations are important, but they are not the essence of thought or consciousness. They’re not substitutes for the things they represent — the self and its settings — but rather biological tools that aid us in more fully experiencing the world in all its particulars. Like Luhn’s abstracts, or cocktail recipes, they’re navigational aids that help us experience and make sense of the fullness of the actual. Whatever intelligence is, it can’t be reduced to representations and models. It is at least as attuned to what makes things unique (and incompressible) as to what makes things similar (and compressible). A recipe is just a recipe, but a good cocktail is a drink.

Sutskever and his former colleagues at OpenAI believe that the kind of compression performed by GPTs can lead to the development of an “AGI” — an artificial general intelligence that can equal or exceed the intellectual capacities of the human mind. But what AGI really stands for in their narrow conception of the mind would be better expressed as artificial generalizing intelligence. It encompasses the mind’s analytic ability to see common patterns in different things or phenomena and to derive general categories or rules from them. But it excludes all the aspects of intelligence that free us from the constraints of rules and patterns: imaginative thinking, metaphorical thinking, critical thinking, contemplation, aesthetic perception, taste, the ability to see, as William Blake put it, “a world in a grain of sand.” In its highest form, intelligence is not compressive. It’s extravagant, from the Latin extravagans, meaning to wander off the established course, to go beyond the general rules.

By removing all subjectivity from thought — by, in short, separating intelligence from being — the mages of AI allow themselves to indulge in tautology. They reduce intelligence to that which their machines can do and then claim their machines are intelligent.

The Servile Artist

In a 2003 article in Times Higher Education, the British theologian Andrew Louth drew a distinction between what he termed “the free arts” and “the servile arts.” The distinction seems fundamental to understanding both the possibilities of human intelligence and the limits of machine intelligence:

The medieval university was a place that made possible a life of thought, of contemplation. It emerged in the 12th century from the monastic and cathedral schools of the early Middle Ages where the purpose of learning was to allow monks to fulfil their vocation, which fundamentally meant to come to know God. Although knowledge of God might be useful in various ways, it was sought as an end in itself. Such knowledge was called contemplation, a kind of prayerful attention.

The evolution of the university took the pattern of learning that characterised monastic life – reading, meditation, prayer and contemplation – out of the immediate context of the monastery. But it did not fundamentally alter it. At its heart was the search for knowledge for its own sake. It was an exercise of freedom on the part of human beings, and the disciplines involved were to enable one to think freely and creatively. These were the liberal arts, or free arts, as opposed to the servile arts to which a man is bound if he has in mind a limited task.

In other words, in the medieval university, contemplation was knowledge of reality itself, as opposed to that involved in getting things done. It corresponded to a distinction in our understanding of what it is to be human, between reason conceived as puzzling things out and that conceived as receptive of truth. This understanding of learning has a history that goes back to the roots of western culture. Now, this is under serious threat, and with it our notion of civilisation.

OpenAI gives the game away in its small-minded definition of artificial general intelligence as “a highly autonomous system that outperforms humans at most economically valuable work [emphasis added].” Economically valuable work is exactly what Louth calls “that to which a man is bound if he has in mind a limited task.” AI in its current form is a servile artist.

None of this would matter much if we had not adopted computer systems as the fundamental conduit of thought and culture. But we have. Those who control the systems control much about us. Their flaws and shortcomings are built not just into the technology but, increasingly, into society’s norms and practices. Just as the brilliant but socially maladroit Mark Zuckerberg came to set the terms for how we socialize today, so the brilliant but intellectually crippled designers of contemporary AI systems seem destined to set the terms for how we think.

Karen Hao, in her recent Empire of AI, relates an episode from 2013 when Elon Musk and Google cofounder Larry Page met at a Napa Valley party and argued about the prospects for artificial intelligence. Amped up as always — half hero, half villain, and lacking the composure to distinguish between the two — Musk implores Page to join him in a crusade to ensure AI doesn’t destroy humanity. Page brushes him off, dismissing his concerns as “speciesist.” The triumph of AI, he tells his friend, is just “the next stage in evolution.” In Page’s response, the deep, carefully concealed misanthropy of the contemporary tech elite bubbles briefly to the surface. These are people who hold human beings — indeed all the messy, incompressible things of the world — in contempt. What can’t be represented in data is without value.

Let me end by bringing in some poetry. The very last poem that the dying Wallace Stevens is said to have written is “Of Mere Being,” which appeared in the 1957 collection Opus Posthumous. It’s a short poem, just four three-line stanzas, but it gets a lot across:

The palm at the end of the mind,
Beyond the last thought, rises
In the bronze decor,

A gold-feathered bird
Sings in the palm, without human meaning,
Without human feeling, a foreign song.

You know then that it is not the reason
That makes us happy or unhappy.
The bird sings. Its feathers shine.

The palm stands on the edge of space.
The wind moves slowly in the branches.
The bird’s fire-fangled feathers dangle down.

One can interpret the poem — hundreds of pages have been written about it — but one cannot compress it. It is entirely extravagant.

“No ideas but in things,” Stevens’s contemporary William Carlos Williams declared as his personal ethos for poetry. The dictum expresses a conception of the mind’s work that is precisely the opposite of “intelligence is compression.” In Stevens’s poem, though, we see at play both attributes of the mind — a facility for abstraction and an acute sensitivity to the thing itself — held in exquisite balance by imagination, metaphor, and memory, three other attributes of the mind. The fully formed intellect sees a palm tree as an example of a pattern expressed by the word palm, but it also sees the tree as an irreducible phenomenon that escapes the prison of the pattern and achieves its own singularity.

The moment you start to believe that all intelligence is compression, you are lost to the world. You exist in the cramped confines of a representation. That’s where GPTs exist, and it’s where we’ll all exist if we continue to follow their lead.

This post is an installment in Dead Speech, a New Cartographies series about the cultural and economic consequences of AI.