Epistemological Slop

Lies, damned lies, and Google.

“I said, Who put all those things in your head?” —Lennon/McCartney, “She Said She Said”

For a while now, I’ve been meaning to write something about the corruption of the Google search engine, which strikes me as one of the defining stories of the information age. This is not that story. But it’s related. As I was writing my last post about television and thinking about the various cultural responses to TV in the sixties and seventies, I started wondering whether the Beatles ever mentioned television in a song. Nothing sprang to mind, which seemed odd given how important TV was to the band’s career.

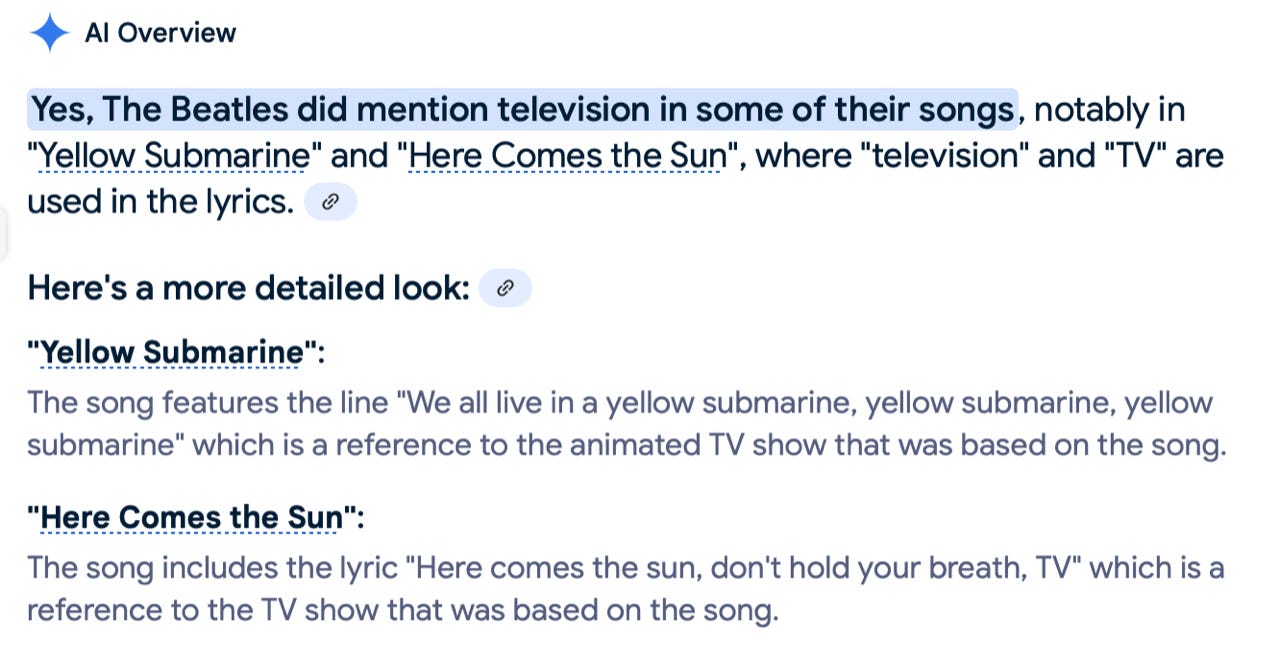

So I googled it. I asked the world’s default search engine, “Did the Beatles ever mention television in their songs?” Google, as is its practice these days, presented at the top of its results an answer from Gemini, its AI chatbot:

It would be hard to imagine three sentences more densely packed with falsehood than these three. It takes considerable effort just to parse the utter wrong-headedness of it all. First, with the confidence characteristic of chatbots, Gemini declares that, yes, indeed, the words “television” and “TV” appear in Beatles lyrics. It offers “Yellow Submarine” and “Here Comes the Sun” as prominent examples. That’s a baldfaced lie. Neither of those songs has either word in its lyrics. Neither has anything whatsoever to do with TV.

Then, in the “more detailed look,” things really get weird. Gemini sort of fesses up to lying about “Yellow Submarine.” The song’s lyrics, it implies, don’t actually contain “TV” or “television,” as it just asserted. But, it claims, there is a reference to TV in the song’s chorus. What is that reference? It’s a reference to a TV show that was based on the song. So “yellow submarine” in the song is a reference to a TV show that did not exist when the song was written. That’s a neat trick. And here’s the kicker: The animated *Yellow Submarine* that was based on the song was not a TV show. It was a movie.

The bit on “Here Comes the Sun” is another botch job. Claiming that the lyrics contain the line “Here comes the sun, don’t hold your breath, TV” is just plain ridiculous. And then there’s the same out-of-sequence claim that the song was based on a TV show that didn’t exist when the song was written. And, anyway, no TV show (or movie) was ever based on “Here Comes the Sun.”[1]

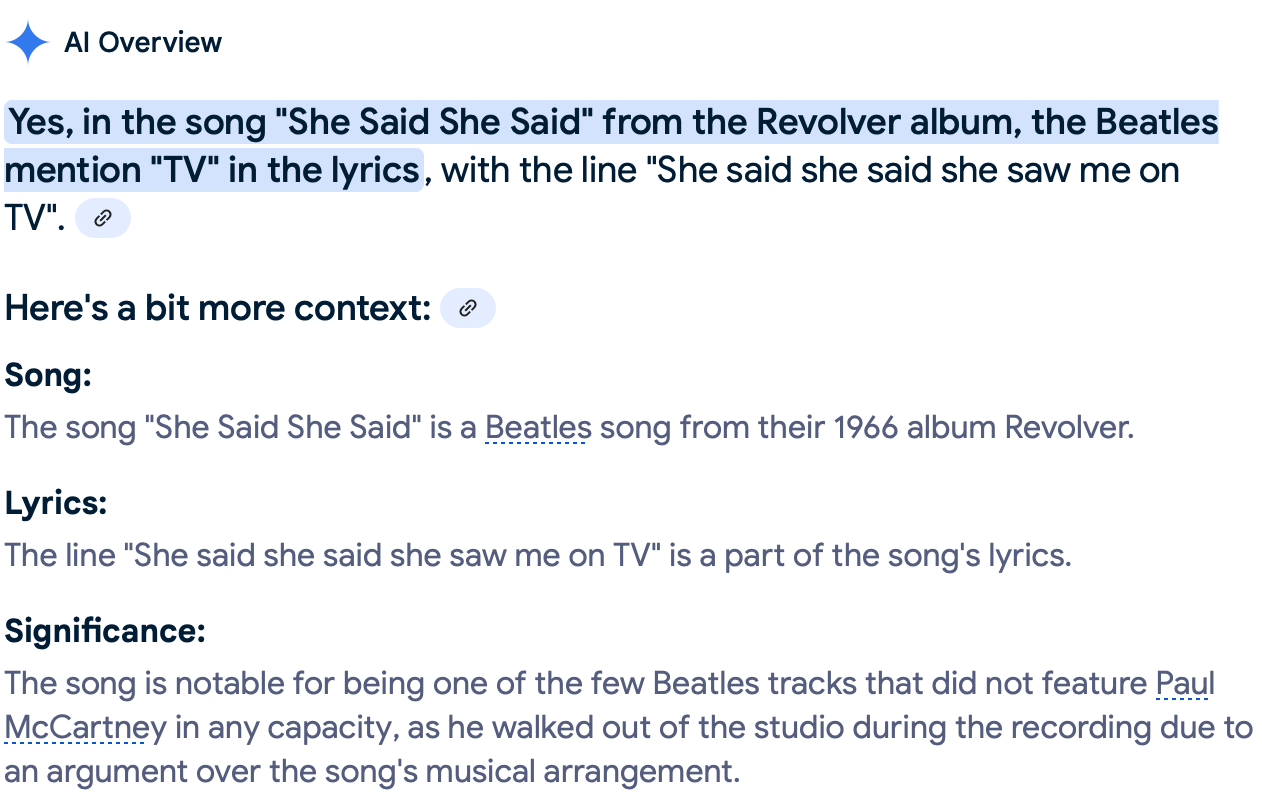

I gave the bot a chance to redeem itself. I asked Google a slight variation on the original query, replacing “television” with “TV.” Here’s what I got:

Same level of confidence, same level of balderdash. “She said she said she saw me on TV” is not in the lyrics to “She Said She Said.” (Though, I have to admit, it’s kind of funny that Gemini would hallucinate about a song that chronicles an acid trip.) And the “Significance” section is not only irrelevant to the query; it’s misleading. The question of whether Paul appears “in any capacity” on the track remains the subject of much controversy among Beatles experts.

There are two larger points that need to be made here. The first is that Gemini’s errors point to three fundamental and related problems in the workings of generative AI today: a lack of common sense, an inability to comprehend time (and hence cause and effect), and an obliviousness to logic. Toward the end of *Superbloom*, I quote the roboticist Rodney Brooks’s observation that large language models are “good at saying what an answer should sound like, which is different from what an answer should be.” The distinction is well illustrated in Gemini’s lucid but bizarre answers to my simple queries. And it calls into question the now widespread assumption that we’re about to see the arrival of a true Artificial General Intelligence. I think we’re going to discover that it’s impossible for a machine to replicate human intelligence if that machine lacks any experience in the world in which humans exist.

The second, more practical point is an ethical one. Although the importance of Google’s search engine has waned in recent years, it remains the world’s most important epistemological tool — the first place many people go to get answers to their questions and fill gaps in their knowledge. Google is now giving precedence in its search results to a chatbot that it knows is unreliable — that it knows spreads lies. That strikes me as being deeply unethical — and a sad testament to how far Google has fallen from its founding ideals.

This post is part of Dead Speech, the New Cartographies series on AI and its cultural and economic consequences.

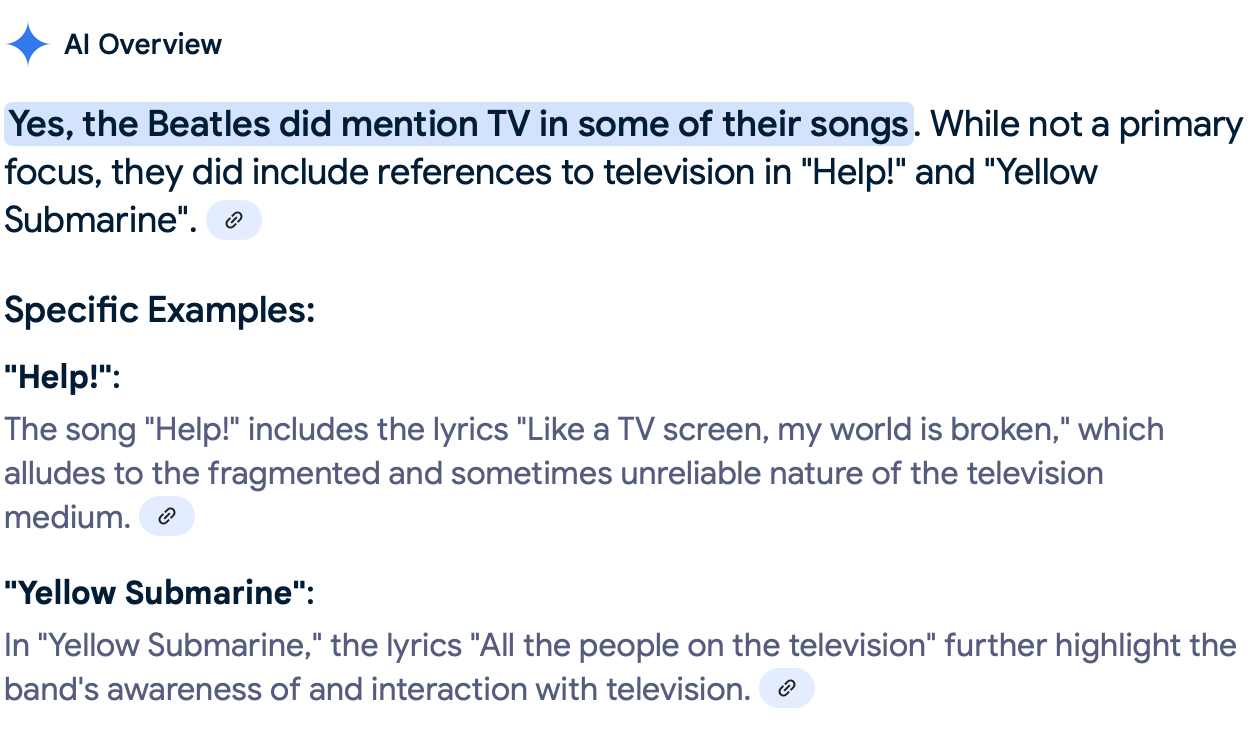

Addendum (4/12): Curious as to whether Gemini had read this post and wised up, I posed my question again. The reply:

Still lying like a rug. Not only has Gemini fabricated a new lyric for “Yellow Submarine,” it has added “Help!” to its list of garbled songs (with a dash of adolescent poetry: “Like a TV screen, my world is broken”). Google needs to teach Gemini the first rule of public discourse: When you find yourself in a hole, stop digging.

[1] CBS recently launched a morning news show called “Here Comes the Sun.” I don’t think that counts.

OMG. This is terrible and crazy. I am trying to teach my kids that just because you Google a thing and find an answer, this does not mean it is correct. I am also trying, with far less success, to convince the average adult I encounter that this does not constitute "research." I fear general artificial intelligence may arrive not so much because the machines get smarter but because the humans get more stupid.

My husband Peco and our daughter discussed exactly the same issue yesterday evening. They both noted that not only did the AI chatbot offer up wrong answers, but it also seems to have developed gaslighting skills. When asked why it provided the incorrect answers (to French grammar questions, findings of research papers, etc.), it plainly denied ever having produced the original output.

The question is how many people will bother to fact-check an AI chatbot….